Dr. Geovanni Martinez, coordinator of the IPCV-LAB, will spend 3.5 months as a visiting researcher in Section 347 of the NASA Jet Propulsion Laboratory (NASA/JPL Robotics) at the California Institute of Technology (Caltech), Pasadena, California, USA, starting in October 2024.

NASA/JPL is the leading center in space exploration with unmanned spacecraft and robots.

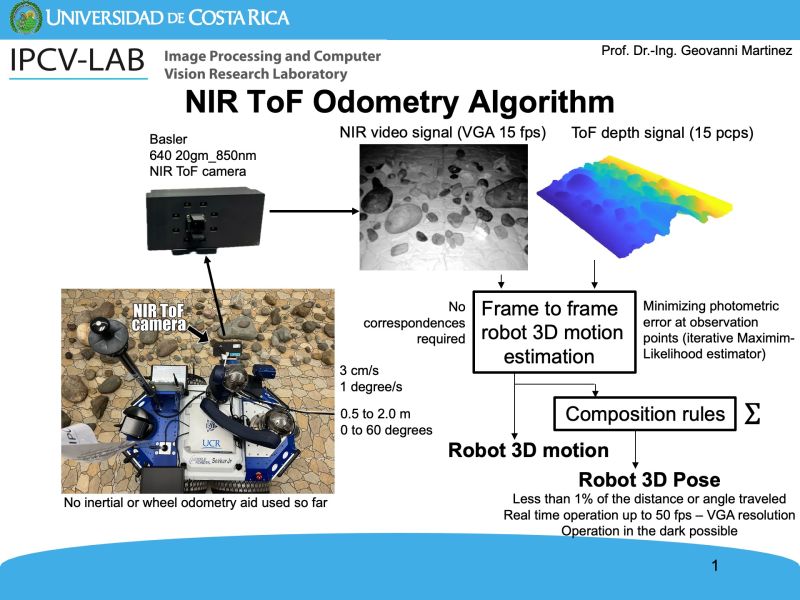

Specifically, the stay has two objectives. The first is to test, on NASA/JPL robots, an algorithm that Dr. Martinez has been researching for more than a decade at the IPCV-LAB. The algorithm determines the three-dimensional (3D) motion, position and orientation of a robot by processing two multidimensional signals: a monocular near-infrared (NIR) video signal and a time-of-flight (ToF) depth signal. Both are provided by a monocular NIR ToF camera that is rigidly attached to the side of the robot and tilted towards the ground. Algorithms of this kind are known as NIR ToF odometry algorithms.
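As a rough illustration of how the two signals complement each other, a pixel in the NIR image can be combined with its ToF depth to obtain a 3D point in the camera frame. The sketch below assumes an ideal pinhole camera and a depth value measured along the optical axis. The function name `back_project` and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are hypothetical and are not taken from Dr. Martinez's work.

```python
def back_project(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with depth depth_m (meters) to a 3D camera-frame point.

    Assumes a pinhole model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. All values are illustrative.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical intrinsics for a VGA (640x480) sensor.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

# A pixel 100 columns to the right of the image center, seen at 2 m depth.
point = back_project(420, 240, 2.0, FX, FY, CX, CY)
print(point)
```

A real ToF camera may report radial distance rather than depth along the optical axis, and lens distortion would need to be corrected first; both are ignored here.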

Odometry algorithms are very important for exploration robots in places where there is no GPS, such as the Moon or Mars, because they provide the robot with information about its 3D motion, position and orientation at all times. The robots use this knowledge to detect whether they are deviating from the trajectories towards the scientific targets, which are either received from Earth beforehand or planned by the robots themselves at the exploration sites. If they detect deviations, the robots must initiate the necessary control actions to correct their course, return to the planned trajectories and stay on them. Otherwise they could end up in places from which they cannot get out without damaging their structures, jeopardizing an entire day of exploration or even an entire mission.
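The deviation check described above can be sketched as a distance test between the position reported by odometry and the planned path. This is only a minimal 2D illustration, assuming the planned trajectory is a straight segment between two waypoints. The function names and the tolerance value are hypothetical and do not describe how NASA/JPL robots actually decide when to correct their course.

```python
import math


def cross_track_error(position, start, end):
    """Shortest distance (meters) from position to the segment start-end."""
    px, py = position
    ax, ay = start
    bx, by = end
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: both waypoints coincide.
        return math.hypot(px - ax, py - ay)
    # Projection parameter of the position onto the segment, clamped to [0, 1].
    u = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    u = max(0.0, min(1.0, u))
    return math.hypot(px - (ax + u * dx), py - (ay + u * dy))


def needs_correction(position, start, end, tolerance_m=0.25):
    """True when the robot is farther from the planned segment than tolerance_m."""
    return cross_track_error(position, start, end) > tolerance_m


# Planned leg from (0, 0) to (10, 0); odometry reports the robot at (5, 0.5).
print(needs_correction((5.0, 0.5), (0.0, 0.0), (10.0, 0.0)))
```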

Dr. Martinez's NIR ToF odometry algorithm determines the 3D position and orientation of the robot by integrating the robot's 3D motion over time using composition rules. The motion is estimated with an iterative Maximum Likelihood estimator that minimizes the linear photometric error measured at observation points between consecutive NIR images; the 3D positions of the observation points are obtained from the ToF depth provided by the camera. The algorithm has proven to be quite accurate, with absolute position and orientation errors of less than 1 percent of the distance and angle traveled, respectively. It operates in real time and can process up to 50 fps at VGA resolution on a conventional laptop. Moreover, because it processes NIR images, it is much less sensitive to local illumination changes caused by moving shadows, and it can operate in the dark.
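To illustrate what integrating motion over time by composition can look like, the sketch below chains frame-to-frame motions into a world pose. It is a generic rigid-body composition, not the specific composition rules of Dr. Martinez's algorithm, which this announcement does not detail. It assumes each motion is a rotation `dR` and a translation `dt` expressed in the robot's current frame, and it restricts rotations to yaw for brevity. All function names are hypothetical.

```python
import math


def rot_z(yaw):
    """3x3 rotation matrix for a rotation of yaw radians about the z axis."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def mat_mul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def mat_vec(a, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def compose(pose, delta):
    """Apply a frame-to-frame motion (dR, dt) to a world pose (R, t).

    Assumed convention: dt is expressed in the robot frame before the step,
    so it is rotated into the world frame by R before being added.
    """
    R, t = pose
    dR, dt = delta
    moved = mat_vec(R, dt)
    return mat_mul(R, dR), [t[i] + moved[i] for i in range(3)]


def integrate(deltas):
    """Accumulate a sequence of motions, starting from the identity pose."""
    pose = ([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
            [0.0, 0.0, 0.0])
    for delta in deltas:
        pose = compose(pose, delta)
    return pose


# Four identical steps: advance 1 m, then turn 90 degrees to the left.
step = (rot_z(math.pi / 2), [1.0, 0.0, 0.0])
R, t = integrate([step] * 4)
print(t)
```

In this example the robot drives around a 1 m square, so the integrated position should return to the origin up to floating-point rounding. In a real system each estimated motion carries a small error, and these errors accumulate through the composition, which is why odometry accuracy is usually reported relative to the distance traveled.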

For more information on Dr. Martinez's NIR ToF odometry algorithms, see the following recently published article:

Geovanni Martinez, “Real-time robot 3D pose computation from NIR imagery and ToF depth maps for space applications”, Trends and Challenges in Multidisciplinary Research for Global Sustainable Development, Book series: Lecture Notes in Networks and Systems, vol. 965, Springer, Cham, pp. 15-27, 2024. DOI: https://doi.org/10.1007/978-3-031-57620-1_2

The second objective of the stay is to go beyond the previous research conducted at the IPCV-LAB by collaborating with robotics specialists at NASA/JPL on new research of mutual interest in estimating the 3D motion, position and orientation of robots, using not only NIR ToF cameras but also other sensors.